ECS Anywhere: the automated way

Mon, 21 Jun 2021

Finally ECS Anywhere is here and I cannot wait for EKS Anywhere to be released to the public as well.

As always, I have seen a lot of posts about ECS Anywhere, but few of them were doing it in an automated way. I hate messing around with the AWS Console; everything has to be scripted, otherwise I don't feel comfortable =)

For this tutorial I have decided to use a Digital Ocean droplet as my "on-premise" VM. I am still waiting for my Intel NUC to arrive; once I receive it, I might update this post or write a new one.

TLDR? You can check the repo I have created to see the code.

Introduction

Before starting there are some important considerations:

The service is not free of charge: you will be charged $0.01025 per hour for each registered instance, regardless of the instance size or number of cores.

Services or tasks spawned on on-premise instances must use the EXTERNAL launch type.

Service load balancing isn't supported. Services or tasks launched externally cannot be attached directly to an ELB, so they are meant more as backend services with only outbound traffic. This is a bad limitation in my opinion; however, you can still use non-AWS load balancing solutions, for example Big-IP ECS Controller or similar.

Capacity providers aren't supported: this is closely coupled with the point above and might make sense if ECS Anywhere is meant only for background jobs. If not, you will have to implement your own solution for scaling up and down and registering new external container instances.

Service discovery isn't supported.

The awsvpc network mode isn't supported.

Integration with App Mesh isn't supported.
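To put the pricing point above into numbers, here is a small sketch that multiplies the hourly rate quoted in this post by the number of registered instances and the hours they stay registered. The function name and the 720-hour month are my own assumptions, and the rate should be checked against the current AWS pricing page before relying on it.

```shell
# Estimate the ECS Anywhere charge for registered external instances.
# RATE is the hourly price quoted in this post, not a value read from AWS.
RATE=0.01025

# usage: ecs_anywhere_cost <instances> <hours>
ecs_anywhere_cost() {
  awk -v n="$1" -v h="$2" -v r="$RATE" 'BEGIN { printf "%.2f\n", n * h * r }'
}

ecs_anywhere_cost 1 720   # one instance for a 30-day month
ecs_anywhere_cost 3 720   # three instances for the same period
```

With the rate above, one instance comes to roughly $7.38 for a 30-day month, and the figure scales linearly with the number of instances.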

Services communication

So why are ELB and Service Discovery not supported? As far as I understand, there is a secure connection only between the ECS control plane and the agents installed on your on-premise VMs or bare-metal servers, so ECS treats your own data center as a separate network. I do not think that, at this stage, using a VPN or Direct Connect can solve this problem. I guess this is more of a service-level limitation; only time will tell.

Create the cluster

In order to use ECS Anywhere I need to create a cluster. This is very simple, and I have my own pre-made custom templates for it.

One important thing that this template will do is deploy the ECSAnywhereRole which is needed to create a Systems Manager activation key pair.

The SSM agent uses those keys to register the instance, which subsequently allows the ECS control plane to operate your external instance.
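If you do not use a template, the same role can be sketched with the AWS CLI. This is not the template used in this post: it is a minimal equivalent, assuming the trust policy and the two managed policies that the AWS documentation lists for external instances. The function names are hypothetical.

```shell
ROLE_NAME="ECSAnywhereRole"   # role name used in this post

# Trust policy that lets Systems Manager assume the role for the external instance.
trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ssm.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Create the role and attach the managed policies for SSM and the ECS agent.
create_ecs_anywhere_role() {
  aws iam create-role --role-name "$ROLE_NAME" \
    --assume-role-policy-document "$(trust_policy)"
  aws iam attach-role-policy --role-name "$ROLE_NAME" \
    --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore
  aws iam attach-role-policy --role-name "$ROLE_NAME" \
    --policy-arn arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role
}
```

Nothing runs until you call create_ecs_anywhere_role with credentials that are allowed to manage IAM.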

Setting up the image

This step is optional, but the first thing I would do is to create a snapshot, which we can use to provision new VMs.

For this task I have used Packer and Ansible; you can check the code here. The Ansible playbook installs some base dependencies (Docker, jq, etc.), allows SSH only and disables password login. Once you have created the snapshot, you can check the image id by running this command:

curl -X GET -H "Content-Type: application/json" -H "Authorization: Bearer <your api key>" "https://api.digitalocean.com/v2/images?private=true" | jq '.images[0].id'

You will need that id later to tell the DO provider which image you wish to use to deploy your droplet.
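The command above returns whichever private image is listed first, which is fine with a single snapshot. If the account holds several, filtering by name is safer. A minimal sketch, assuming the snapshot name is known; the function name, DO_TOKEN and the snapshot name in the example are placeholders of mine.

```shell
# usage: image_id_by_name <snapshot-name>
# Reads the JSON response of the /v2/images endpoint on stdin and prints the matching id.
image_id_by_name() {
  jq -r --arg name "$1" '.images[] | select(.name == $name) | .id'
}

# Example call (placeholders: DO_TOKEN, ecs-anywhere-base):
# curl -s -H "Authorization: Bearer $DO_TOKEN" \
#   "https://api.digitalocean.com/v2/images?private=true" | image_id_by_name "ecs-anywhere-base"
```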

Provision and registration

So now I have the cluster, the ECSAnywhere role and the droplet snapshot.

I will create a one-time registration key pair and write the response into a json file:

aws ssm create-activation --iam-role ECSAnywhereRole --registration-limit 1 | tee ssm-activation.json

Then copy this file into the instance and use it for the registration. For this operation I have used Terraform provisioners, as it was a bit tricky to run the AWS CLI on an "on-premises" platform:

provisioner "file" {
  source      = "ssm-activation.json"
  destination = "~/ecs-anywhere/ssm-activation.json"
}

provisioner "file" {
  source      = "user-data.sh"
  destination = "~/ecs-anywhere/user-data.sh"
}

Finally execute the user-data script:

provisioner "remote-exec" {
  inline = [
    "cd ~/ecs-anywhere && chmod +x user-data.sh && ./user-data.sh",
  ]
}

The user data script will:

Load the file ssm-activation.json and convert the properties into variables:

ACTIVATION_ID=$(jq -r '.ActivationId' < ssm-activation.json)
ACTIVATION_CODE=$(jq -r '.ActivationCode' < ssm-activation.json)

Download and run the helper script with the necessary parameters. This script will install and configure all the necessary dependencies (SSM Agent, ECS Agent):

sudo bash /tmp/ecs-anywhere-install.sh --region "$REGION" --cluster "$CLUSTER_NAME" --activation-id "$ACTIVATION_ID" --activation-code "$ACTIVATION_CODE"

As a final step, I think we should delete the JSON file containing the keys, just to be safe.
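Put together, the three steps could look like the sketch below. This is not the user-data.sh from the repo: the function names are hypothetical, REGION and CLUSTER_NAME are assumed to be set by the caller, and the installer URL is the one published in the AWS documentation rather than something shown in this post.

```shell
ACTIVATION_FILE="${ACTIVATION_FILE:-ssm-activation.json}"
INSTALLER="/tmp/ecs-anywhere-install.sh"
# Assumption: installer location taken from the AWS documentation.
INSTALLER_URL="https://amazon-ecs-agent.s3.amazonaws.com/ecs-anywhere-install-latest.sh"

# Step 1: read the activation id and code from the JSON file.
load_activation() {
  ACTIVATION_ID=$(jq -r '.ActivationId' < "$ACTIVATION_FILE")
  ACTIVATION_CODE=$(jq -r '.ActivationCode' < "$ACTIVATION_FILE")
  # jq prints "null" for a missing key, so reject that as well as an empty value.
  [ -n "$ACTIVATION_ID" ] && [ "$ACTIVATION_ID" != "null" ] &&
    [ -n "$ACTIVATION_CODE" ] && [ "$ACTIVATION_CODE" != "null" ]
}

# Step 2: download the helper script over HTTPS only, then run it.
install_agents() {
  curl --proto '=https' -fsS -o "$INSTALLER" "$INSTALLER_URL" || return 1
  sudo bash "$INSTALLER" --region "$REGION" --cluster "$CLUSTER_NAME" \
    --activation-id "$ACTIVATION_ID" --activation-code "$ACTIVATION_CODE"
}

# Step 3: remove the file that contains the keys.
cleanup() {
  rm -f "$ACTIVATION_FILE"
}

main() {
  trap cleanup EXIT   # delete the keys even if an earlier step fails
  load_activation && install_agents
}

# Run with, for example: REGION=eu-west-1 CLUSTER_NAME=my-cluster main
```

Registering the cleanup with trap means the keys are removed whether the installer succeeds or not, which matters because the activation is one-time anyway.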

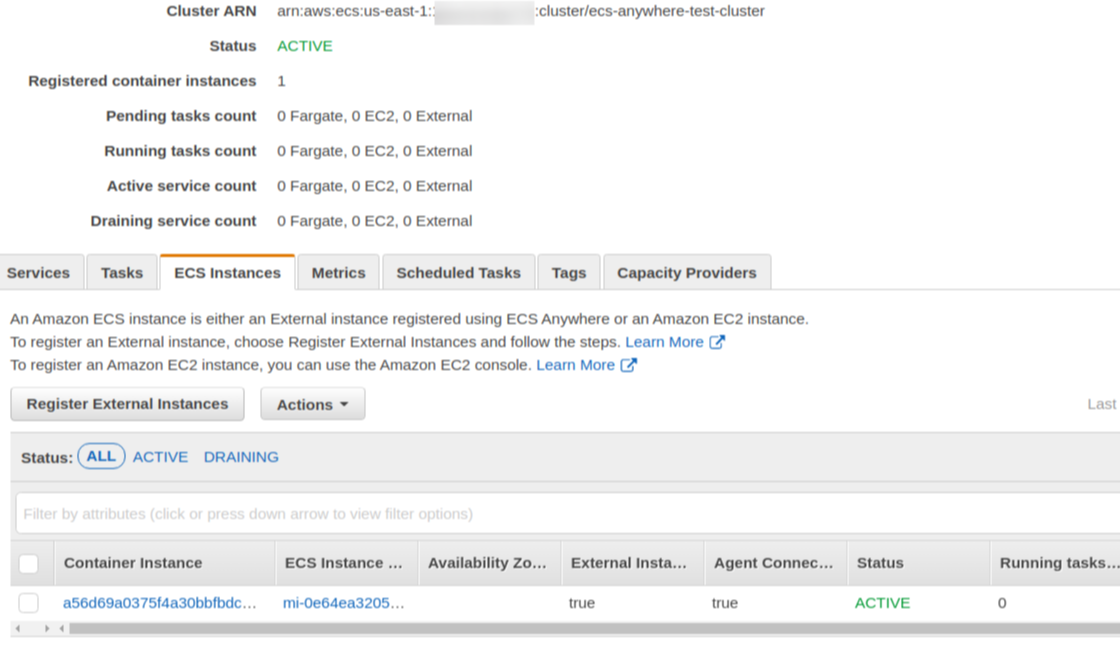

If everything went as planned, you should see your instance appearing in the ECS cluster.
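Since I prefer not to open the console, the same check can be scripted. A minimal sketch using the AWS CLI, assuming your credentials can read the cluster; the function names are mine.

```shell
# usage: registered_instances <cluster-name>
# Prints how many container instances the cluster currently lists.
registered_instances() {
  aws ecs list-container-instances --cluster "$1" \
    --query 'length(containerInstanceArns)' --output text
}

# usage: check_registration <cluster-name>
# Succeeds only when at least one container instance is registered.
check_registration() {
  count=$(registered_instances "$1") || return 1
  case "$count" in
    ''|*[!0-9]*|0)
      echo "no container instance registered in $1" >&2
      return 1 ;;
    *)
      echo "$count container instance(s) registered in $1" ;;
  esac
}

# Example (my-cluster is a placeholder): check_registration my-cluster
```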

Deploy the service

Now we can deploy our test service. You can use this CloudFormation template and pass the name of the ECS cluster as a parameter. This will deploy a classic Nginx server.

If the deployment was successful, you should be able to reach your droplet IP on port 80 and receive the Nginx welcome page. You can also SSH into your VM and see the container running (docker container ls), or navigate to the ECS console and see your service in the list.
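The container can take a little while to start after the stack finishes, so a scripted check is more reliable with a few retries. A small sketch, assuming the droplet exposes port 80 publicly; the function name and DROPLET_IP are placeholders.

```shell
# usage: wait_for_http <url> [attempts] [delay-seconds]
wait_for_http() {
  url="$1"; attempts="${2:-10}"; delay="${3:-3}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl exit non-zero on HTTP errors (4xx/5xx)
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "$url is answering"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "$url did not answer after $attempts attempts" >&2
  return 1
}

# Example: wait_for_http "http://$DROPLET_IP:80/"
```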

Conclusion

Honestly, it was easier than expected. At first I was sure it would take more time and effort, but for once AWS made this a painless process. The script they provide helped a lot with the setup, and for once I was happy with their documentation on the topic: it was clear and straightforward. It is just a pity to have those limitations, but the service is quite new and I am sure the team working on this project will find a way to overcome those issues. Cheers!